As much as I would like to be, I am not a computer science academic. Not at all.

I took a different path though.

I’ve been spending a big chunk of my days reading, writing, changing, and debugging computer programs for 25 years now. During this time, I’ve earned a technical degree in Data Processing and spent more than three years in a Computer Science undergrad program (dropping out in the fourth year, a semester or so before graduating). Later on, after earning a bachelor’s in Nutrition, I ended up pursuing a postgraduate degree in Data Science & Big Data.

Over those 25 years, you bet I’ve read a fair share of books, articles, blogs, and whatnot, in an honest effort to educate myself. Still, I feel too undereducated to offer opinions outside my field of expertise, i.e. architecting software systems for the Internet.

That’s why I always look for people who know better than me, so I don’t embarrass myself for free on the Internet. It might not be cool, but it is intellectually honest. I’ll take it.

LLM Hype

Lately there has been a lot of hype around LLMs (large language models, e.g. GPT-3), especially ChatGPT. You must be living under a rock not to have heard about it. This thing is everywhere. People are freaking out about it. Some love it, some hate it, and there’s everything in between, I guess.

Here I am.

Even though I have a postgraduate degree in data science, I’m far from being an expert in LLMs. Far, far away. Not even within sight of human eyes. So my thoughts on them are based on my observations over the years and a fair share of real-world experience as a software engineer. Take them with a grain of salt.

What do I think about it?

I think it might be a handy tool in your programmer’s toolbox. Keep it there, just as you keep Google and Stack Overflow. That’s it. Simple as that.

I mean, let me elaborate a little more on this claim.

When I first got into programming, I had to buy books and magazines or borrow them from school and public libraries; I also had a good buddy at work who taught me a lot about Clipper, and that was it. Then I discovered online discussion forums (e.g. GUJ). Then I learned about and attended user group meetings (e.g. SouJava). And then came Stack Overflow, GitHub, and the plethora of technical content all around the Internet. You know what I’m talking about: just Google anything about the most esoteric programming language and there you have it.

Those things weren’t mutually exclusive; they added up, one on top of another, like the bricks of a building. Or should I say a skyscraper? Go figure.

Anyway, I guess what I’m trying to say is that, even though I’m able to search anything on Stack Overflow (or rather on Google and then point & click it), read blogs, watch all sorts of interesting talks on YouTube, and so on and so forth, I still buy books and read through them. One thing does not exclude the other.

The same thing applies to LLM tools.

Be An Education-First Programmer

I would like to make a point here: I am a firm believer in “quality” education. And by that I mean attending formal university courses and reading “meaty” books.

I know it is not a popular opinion nowadays, with all the bootcamps, quick video courses, and bite-sized content being heavily advertised out there as if they were the penicillin of the 21st century, but honestly I couldn’t care less. Solid knowledge that stands the test of time calls for a strong education. So take your time, do the hard work, get past the quick tutorial, and get yourself educated.

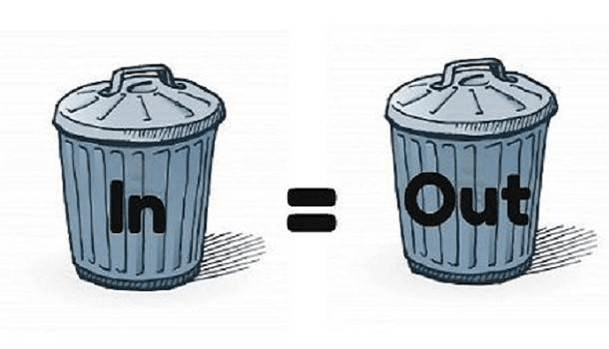

Keep in mind that with only shallow knowledge you might not even be able to tell whether a Stack Overflow answer is right or wrong, or, if it is right and works as expected, why it works. And what would you do if it doesn’t work? Trial and error ad nauseam, right? Which brings us to an indisputable fact.

Nobody likes that guy/gal in the office who asks questions all the time and wants every piece of information pre-chewed so they can just swallow it without breaking a sweat. I certainly don’t.

Let me tell you: copy & paste driven programming has been a thing for quite a long time, at least since we could find candidate solutions to most computing problems by searching the Internet. This is nothing new. People have been doing it for ages now, even more so since the advent of open source software and powerful search engines. And there is nothing wrong with copying and pasting code from the Internet. Nothing. Well, at least until there is.

After all, we can copy & paste small snippets of code here and there, such as sample usages of APIs we are not too familiar with, haven’t used yet, or just want a quick start with. We have all done that and will keep doing it. (Regex? Cron? Yeah, I feel you.) However, if we do not have a solid base in programming and software design, if we do not take the time to understand what we’re doing, we will end up in a frustrating loop of trial and error. Worse than that, folks, we will end up introducing hard-to-find bugs, performance issues, and security vulnerabilities into our software, which, by the way, most of the time isn’t even our software but our employers’. Think about that for a second.

There is much more to software development than writing an SQL query, building an API endpoint, calling a remote service from a mobile app, or coding a depth-first search. A complete software solution is made of much more than that, and software engineering, as a field, is concerned with exactly that. Hence my point: if you don’t know what to ask for, what sort of answers do you think you’re gonna get? And will you be able to tell which answer is right for your particular case?

When you read through answers on Stack Overflow, you’d better be quite sure about what you’re looking for in order to judge them reasonably; there is a lot of junk out there too, mind you, and it doesn’t come labeled “garbage”. Then you must take into account the whole context of the original question and the answers provided, skipping the garbage here and there, before you can decide whether to copy & paste a snippet as-is or write your own solution based on what you’ve learned (the specific problem you’re trying to solve has its own edge cases, for example). And this very process, repeated over and over, builds up your knowledge.

See where we’re going? See my point here? See how ChatGPT can be a trap? You ask it, you get an answer. An answer so confident that, if you don’t have broad and solid knowledge of programming, you’ll buy absolute horse crap without even knowing it. I’ve seen quite a lot of that with friends on Twitter and whatnot. Even with widely known algorithms.

As such, this “much more” is what we should care about (besides the basics) and what we must focus on (provided we have the basics in place). Which brings us to another merciless fact.

We don’t know what we don’t know. And the only thing we can do about that is acquire new knowledge, again and again: breadth first, depth second.

This way, hopefully, we will know what we’re looking for and have at least a grasp of the answer when we find it.

– Broad knowledge gives us a direction to go search for answers;

– Deep knowledge helps us discern among found answers.

Okay. Cool. But how do we do that?

For broadening your knowledge of what’s out there to know, I think blogs and YouTube videos are great. They are short content that you can consume on a daily basis without breaking a sweat. But for deep, solid knowledge, in my opinion (N=1), there is nothing better than books and official documentation. And if you can, and haven’t yet, get yourself a seat (physical or online) in a university course (undergraduate or postgraduate, accordingly).

Again, not a popular opinion. But I’m not popular myself, so who cares?

Enter LLM tools

I have several unpopular opinions, but I think this one will top the hit parade: LLMs might be an awesome tool to help you gain solid knowledge. How is that? I’ll tell you how.

Start by asking ChatGPT what one needs to know about, let’s say, mobile app development. Then ask for short tutorials and YouTube videos to get the big picture. Next, ask for highly recommended books and lectures on it. Ultimately, you want to read several in-depth articles, from different authors with different points of view, to get a solid understanding of the topic you’re studying.

That’s one thing. Another situation where it might help is when you’re reading a book and get stuck for lack of knowledge of some prerequisite subject. Ask ChatGPT to sum that subject up for you, so you have a base before going ahead. It’s the same thing you would do with Google, reading one or two sources, only quicker and right to the point. Afterwards, though, go ahead and dig deep into that subject if it’s important to the broader topic you’re studying. It’s okay to do that.

Yet another situation where it might be very helpful is when you’re about to develop a software application for a domain whose ins and outs you’re not too familiar with. I saw someone do that the other day and found it fairly smart.

Let’s say you’re going to develop a fitness mobile app, because you’re into fitness and are super thrilled with the idea of helping people get healthy and everything. Okay. But you don’t know all the apps out there, their most loved features, how they are monetized, etc. These are very objective questions, so you can ask ChatGPT about them to get started quickly. You ask about app requirements. You ask it, for example, to write the welcome email you’re gonna send to new subscribers of your awesome fitness app, because you’re not particularly good at that. Right. Now what? If you understood what I’ve talked about up to this point, you already have a good sense of what to do next. You improve it, you make it better, you add your special sauce. In other words, break the inertia, which is arguably the most important thing in this case, then make it special; otherwise, all you’ll have is generic stuff to sell. Not cool, I guess.

If you have access to GitHub Copilot and there’s no harm in using it at work, you can ask it to scaffold pieces of code for you, like a method to parse a file, a repetitive test case, or whatnot. You know, the boring stuff you have to do every now and then that can’t be fully avoided even with proper code design and smart libraries. Nothing wrong with that. You don’t get extra points for not doing so, as long as there is no policy against it where you work.

There might be many other similar applications, mind you. Think about that.

Oh, wait. I just thought of the greatest benefit this could bring us: you know those folks who like to post on forums asking for solutions to their schoolwork? Like, you read the question and it’s almost the literal assignment statement. You’ve probably seen that before. Yeah, now they can ask ChatGPT instead and leave us alone. Everyone is happy.

Will LLMs replace human programmers?

No. I don’t think so. I am very skeptical about “X is going to replace human programmers”. Since I started programming in 1997, I’ve heard my fair share of fads like that.

It will likely change the way we learn programming, how we get unblocked when we’re stuck, and how we deal with boring stuff. But replace us, Homo sapiens, for anything beyond CRUDs and mundane, widely known apps? No, I am very skeptical about that. I might be wrong, though.

And now, finally, this article comes full circle with what motivated me to write it in the first place.

Yesterday I had the pleasure of stumbling on an amazing article by Amy J. Ko, which was a real joy to read. First, because I agree with almost everything she wrote there. Second, because, unlike me, she is an academic and has been following this subject for more than a decade. So she definitely has a thing or two to contribute to this whole discussion without embarrassing herself.

So do yourself a favor, cut through the hype, and read these outstanding articles to get a better sense of the topic from different points of view:

– Large language models will change programming… a little

– Large language models will change programming … a lot

There’s no TL;DR there. Take your time, enjoy the ride.

Now, to end this long piece with a funny thing: I used to watch people make fun of academics all the time, saying how far academia is from real-world software development, and then, all of a sudden, people are amazed and freaking out about a thing brought to life straight out of academic research. What a piece of irony. What a good time to be an academic.

Anyway, see you next time, folks.

Be careful with the hype.